The News

Last week, a summer reading list recommending books by popular authors appeared in the Chicago Sun-Times and the Philadelphia Inquirer. The problem? Only some of the books actually existed. The list, it turns out, was generated by AI and made it to publication without anyone reviewing its contents. It was taken down, followed by embarrassment and apologies.

It’s the latest development in the journalism industry’s use of AI, and it brings concerns about AI’s role in media back into the spotlight.

The Trends

AI use has taken off in recent years. Sixty-five percent of outlets report using the technology in their operations, and over half of journalists use it for reporting tasks such as transcribing interviews, writing first drafts, and proofreading copy. As the industry is forced to do more with less, it is leaning on AI more heavily.

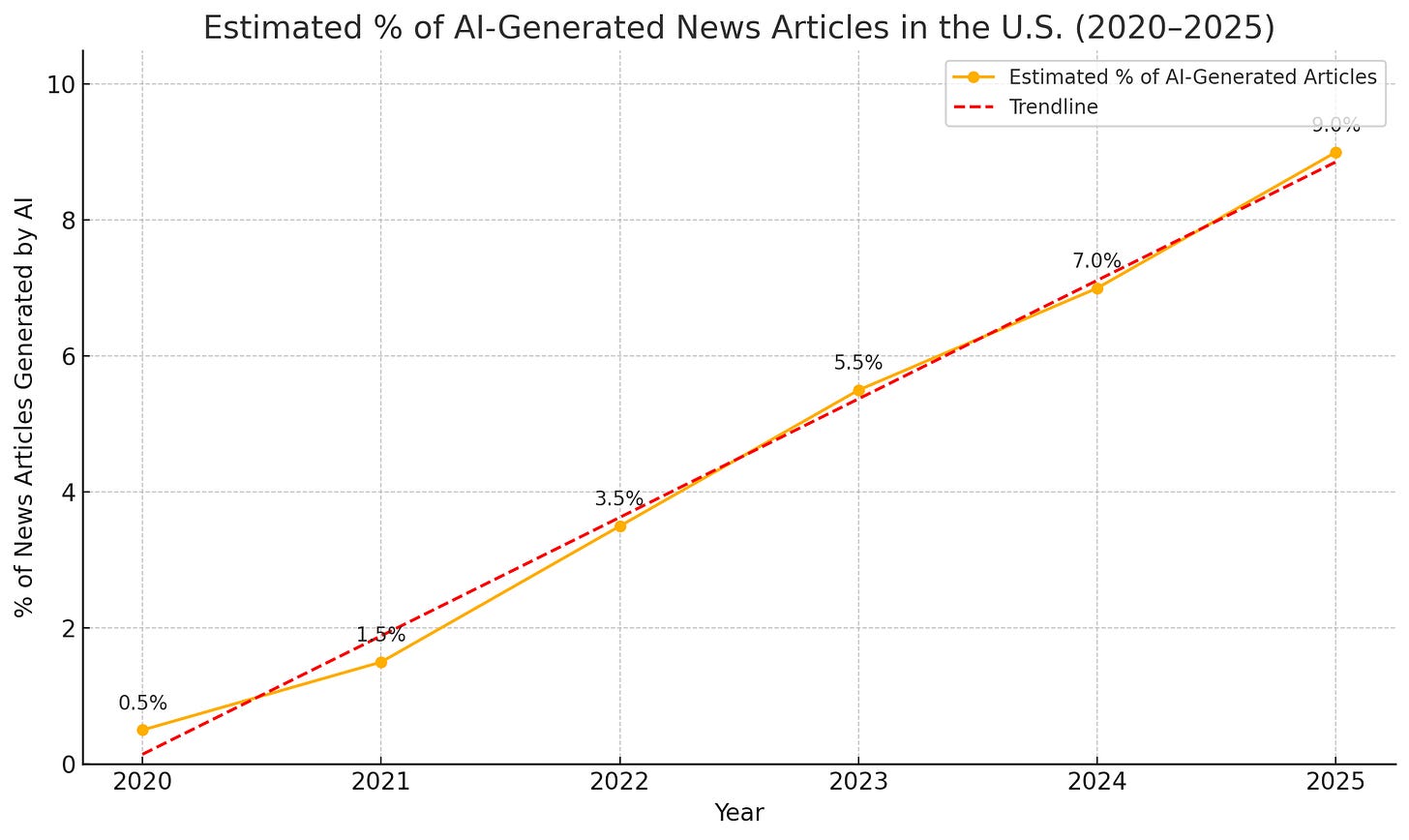

Many news organizations don’t report how many of their articles are produced using AI, but Gitnux, a market data and statistics website, estimates that 7% of news content published daily is AI-generated, a share it expects to grow to 9% by year end.

What’s Missing

To me, the use of AI isn’t the issue. AI is coming for every industry, whether we like it or not. Concise, AI-generated summaries of events and outlines of the day’s headlines are useful to readers, and AI can help reporters and journalists do their jobs more efficiently.

Yet the increase in AI use has not been matched by anything close to a proportionate increase in editorial monitoring of its output. News outlets are working with smaller budgets, fewer staff, and intense competition, all under the threat of consolidation and declining public trust. To survive with AI in the newsroom, outlets should have dedicated personnel reviewing the content they publish. Data on which publications have tried this is scarce. Most are busy fighting the use of AI rather than evolving their business to manage it appropriately. An AI editor may be the most important hire a news organization could make in 2025.

The writer credited with last week’s summer reading list quickly admitted fault and took the blame, saying there was no excuse for the error. Yet with only one line of defense, outlets risk missing more instances of bad AI. If the industry as a whole wants to regain public trust, it needs to make the necessary moves to fix this.